Web UI

MLModelScope uses OpenAPI (also referred to as Swagger) to define its REST API. OpenAPI is a language-agnostic specification that is supported by many languages and has compilers that generate both client and server code for them. We use the Swagger specification to generate our server code along with the JavaScript client code. Through the Swagger file, MLModelScope exposes a few REST API endpoints. These are the same endpoints used by the JavaScript client to interact with the system. MLModelScope’s Swagger definition is in the file dlframework.swagger.json.
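To see which endpoints a Swagger file exposes, one can load it as JSON and walk its `paths` object. The sketch below does this on a tiny inline document rather than the real dlframework.swagger.json. The sample is an assumption made for illustration: only the three /api/predict/... paths are taken from the Python client later in this section, and the summaries are invented. The helper name `list_endpoints` is hypothetical.

```python
import json

# Illustrative stand-in for a Swagger 2.0 document. The real
# dlframework.swagger.json is larger; these entries are assumptions.
SAMPLE_SWAGGER = """
{
  "swagger": "2.0",
  "paths": {
    "/api/predict/open": {"post": {"summary": "Open a predictor"}},
    "/api/predict/urls": {"post": {"summary": "Run inference on URLs"}},
    "/api/predict/close": {"post": {"summary": "Close a predictor"}}
  }
}
"""

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch"}


def list_endpoints(spec):
    """Return sorted (METHOD, path) pairs from a parsed Swagger document."""
    endpoints = []
    for path, operations in spec.get("paths", {}).items():
        for method in operations:
            # Path items may also hold non-operation keys such as "parameters".
            if method.lower() in HTTP_METHODS:
                endpoints.append((method.upper(), path))
    return sorted(endpoints)


if __name__ == "__main__":
    for method, path in list_endpoints(json.loads(SAMPLE_SWAGGER)):
        print(method, path)
```

To inspect the real definition, replace `json.loads(SAMPLE_SWAGGER)` with `json.load(open("dlframework.swagger.json"))`.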

Web Server

One can extend the web server of MLModelScope by updating the Swagger file.

The OpenAPI specification can be used in conjunction with tools such as Postman to create requests. The Swagger UI (shown above) can also be used to make REST requests.
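The same kind of request can also be assembled in a few lines of Python. The following is a minimal sketch, using only the standard library, that builds (but does not send) a POST to the /api/predict/open endpoint used by the Python client later in this section. The helper name `build_open_request` is hypothetical, and the body fields mirror that client rather than an authoritative reading of the Swagger file.

```python
import json
import urllib.request


def build_open_request(base_url, framework_name, framework_version,
                       model_name, model_version, batch_size=1):
    """Build (without sending) a POST request that opens a predictor."""
    body = {
        "framework_name": framework_name,
        "framework_version": framework_version,
        "model_name": model_name,
        "model_version": model_version,
        "options": {"batch_size": int(batch_size)},
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/predict/open",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_open_request("http://www.mlmodelscope.org",
                             "MXNet", "1.3.0", "BVLC-AlexNet", "1.0")
    # Print what would be sent; call urllib.request.urlopen(req) to send it.
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Building the request separately from sending it makes it easy to check the method, URL, and JSON body before contacting the server.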

To regenerate the swagger definition file, navigate to the rai-project/dlframework directory and type

    make generate-proto

This will output a dlframework.swagger.json file.

To generate the Swagger client and server files from the swagger.json definition, type

    make generate

After updating the Swagger file, one then adds the corresponding implementations for the services.

Web Clients

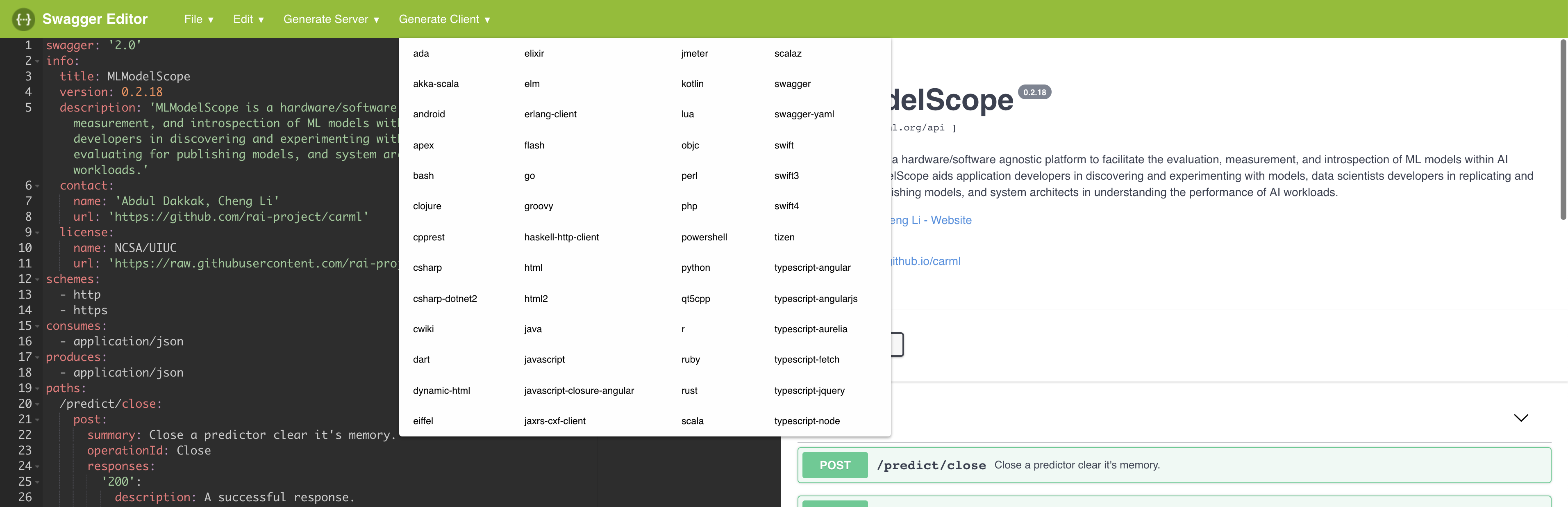

The Swagger editor tool can be used to generate the client code online. One just copies the Swagger file into the editor, and the API is rendered.

One can then choose one of the client generators supported by the public editor (other generators, not supported by the editor, can also be found publicly).

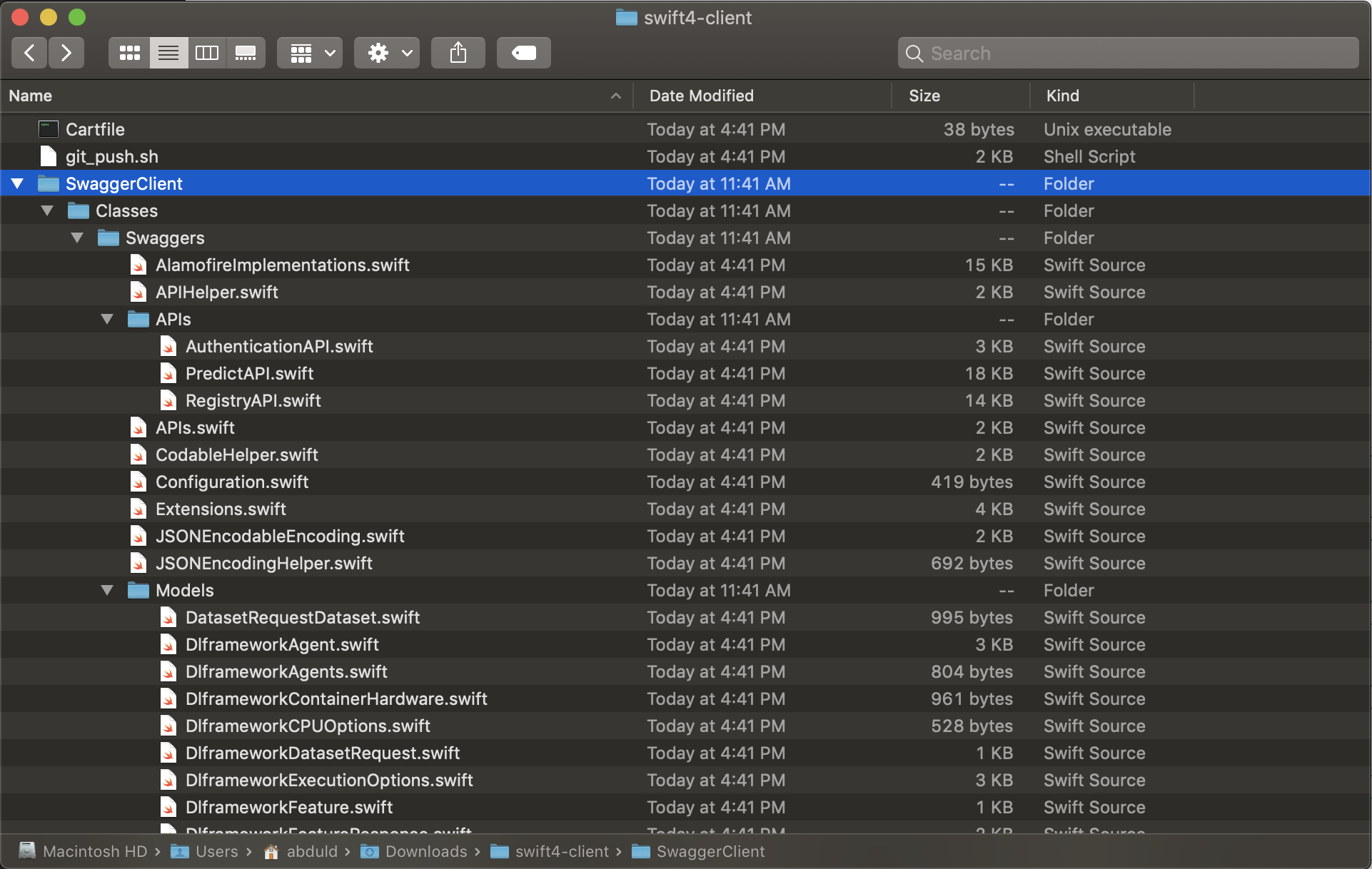

If we select Swift, for example, the client code is generated, archived, and handed to us for use within our Swift application.

The generated client and server projects are placed within the web folder in rai-project/dlframework.

The Swagger file can be used to generate client code in any language, but a client is also easy to write by hand. The code below shows an example Python client that interacts with the web server. The logic is similar in other languages.

    """Console script for MLModelScope_python_client."""

    import uuid

    import chalk
    import click
    import requests


    @click.command()
    # The explicit second argument pins the parameter name; recent click
    # releases would otherwise lowercase it to "mlmodelscope_url".
    @click.option('--MLModelScope_url', 'MLModelScope_url', default="http://www.mlmodelscope.org", help='The URL to the MLModelScope website.')
    @click.option('--urls', default='example.txt', type=click.File('rb'), help="The file containing all the urls to perform inference on.")
    @click.option('--framework_name', default='MXNet', help="The framework to use for inference.")
    @click.option('--framework_version', default='1.3.0', help="The framework version to use for inference.")
    @click.option('--model_name', default="BVLC-AlexNet", help="The model to use for inference.")
    @click.option('--model_version', default='1.0', help="The model version to use for inference.")
    @click.option('--batch_size', default=256, help="The batch size to use for inference.")
    @click.option('--trace_url', default="trace.mlmodelscope.org", help="The URL to the tracing server.")
    @click.option('--trace_level', default='STEP_TRACE', help="The trace level to use for inference.")
    def main(MLModelScope_url, urls, framework_name, framework_version, model_name, model_version, batch_size, trace_url, trace_level):
        """Console script for MLModelScope_python_client."""
        # Normalize both URLs: drop surrounding slashes and default to http.
        MLModelScope_url = MLModelScope_url.strip("/")
        if not MLModelScope_url.startswith("http"):
            MLModelScope_url = "http://" + MLModelScope_url

        trace_url = trace_url.strip("/")
        if not trace_url.startswith("http"):
            trace_url = "http://" + trace_url

        openAPIURL = MLModelScope_url + "/api/predict/open"
        urlsAPIURL = MLModelScope_url + "/api/predict/urls"
        closeAPIURL = MLModelScope_url + "/api/predict/close"

        chalk.green("performing inference using " + MLModelScope_url)

        # Step 1: open a predictor for the chosen framework and model.
        click.echo("performing open predictor request on " + openAPIURL)
        openReq = requests.post(openAPIURL, json={
            'framework_name': framework_name,
            'framework_version': framework_version,
            'model_name': model_name,
            'model_version': model_version,
            'options': {
                'batch_size': int(batch_size),
                'execution_options': {
                    'trace_level': trace_level,
                    'device_count': {'GPU': 0}
                }
            }
        }, allow_redirects=False)
        openReq.raise_for_status()

        openResponseContent = openReq.json()
        predictorId = openResponseContent["id"]
        click.echo("using the id " + predictorId)

        # Step 2: send one item per line of the input file, each with a fresh id.
        lines = urls.readlines()
        urlItems = [{'id': str(uuid.uuid4()), 'data': url.decode("utf-8").strip()}
                    for url in lines]

        urlReq = requests.post(
            urlsAPIURL,
            json={
                'predictor': openResponseContent,
                'urls': urlItems,
                'options': {
                    'feature_limit': 0,
                }
            },
            headers={},
            allow_redirects=False,
        )
        urlReq.raise_for_status()

        urlsRes = urlReq.json()
        traceId = urlsRes["trace_id"]["id"]
        # Uncomment to inspect the first five features of the first response:
        # print(urlsRes["responses"][0]["features"][:5])

        # Step 3: close the predictor. The original call passed an undefined
        # `headers` variable, which raised a NameError; it is dropped here.
        requests.post(closeAPIURL, json={'id': predictorId}, allow_redirects=False)

        # Print the link to the trace recorded for this run.
        print(trace_url + ":16686/api/traces/" + traceId)


    if __name__ == "__main__":
        main()
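The parts of the client that do not touch the network (URL normalization, building the list of URL items, and forming the trace link) can be pulled into small functions and checked offline. The sketch below is one way to do that. The function names are hypothetical and not part of MLModelScope, and unlike the script above, `build_url_items` skips blank lines, which is a deliberate assumption.

```python
import uuid


def normalize_url(url):
    """Strip surrounding whitespace and slashes, defaulting to the http scheme."""
    url = url.strip().strip("/")
    if not url.startswith("http"):
        url = "http://" + url
    return url


def build_url_items(lines):
    """Turn raw lines (bytes or str) into the {'id', 'data'} items for /api/predict/urls."""
    items = []
    for line in lines:
        text = line.decode("utf-8") if isinstance(line, bytes) else line
        text = text.strip()
        if text:  # assumption: blank lines are skipped (the script above keeps them)
            items.append({"id": str(uuid.uuid4()), "data": text})
    return items


def trace_link(trace_url, trace_id, port=16686):
    """Form the trace URL the same way the script's final print statement does."""
    return "{}:{}/api/traces/{}".format(normalize_url(trace_url), port, trace_id)


if __name__ == "__main__":
    print(normalize_url("www.mlmodelscope.org/"))
    print(build_url_items([b"http://example.com/cat.jpg\n", b"\n"]))
    print(trace_link("trace.mlmodelscope.org", "abc123"))
```

Keeping these steps free of HTTP calls means the request-building logic can be tested without a running MLModelScope server.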