Design

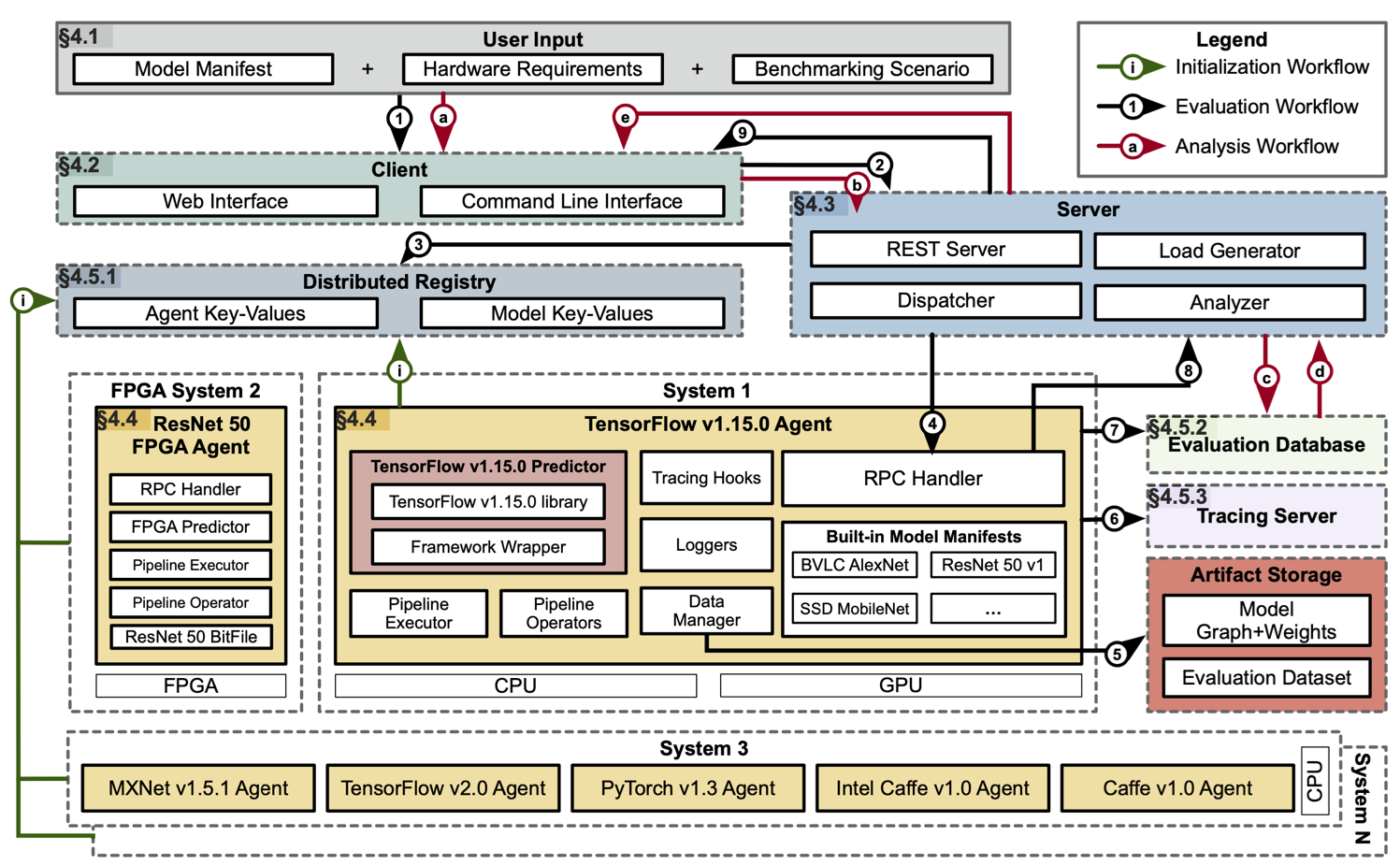

CarML deploys models for evaluation in either a cloud scenario (as in model serving platforms) or an edge scenario (as in local model inference). To keep up with the fast pace of DL, CarML is built as a set of extensible and customizable modular components:

- Client: the web UI or command line interface through which users supply their inputs and initiate a model evaluation by sending a REST request to the CarML server.

- Server: handles client requests through its REST API, dispatches model evaluation tasks to CarML agents, generates benchmark workloads based on benchmarking scenarios, and analyzes the evaluation results.

- Agents: run on the different systems of interest and perform model evaluation based on requests sent by the CarML server. An agent can run within a container or as a local process, and contains the logic for downloading model assets, pre-processing inputs, invoking the framework predictor for inference, and post-processing outputs. Aside from the framework predictor, all code in an agent is common across frameworks.

- Framework Predictor: a wrapper around a framework that provides a consistent interface across different DL frameworks. The wrapper is designed as a thin abstraction layer that exposes a limited number of common APIs, so that any DL framework can be easily integrated into CarML.

- Middleware: a set of support services for CarML, including a distributed registry (a key-value store containing entries of running agents and available models), an evaluation database (a database containing evaluation results), a tracing server (a server to which profile events captured during an evaluation are published), and an artifact storage server (a data store containing model assets and datasets).
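The split between the framework predictor and the rest of the agent can be sketched in Go as follows. This is a minimal illustration, not the project's actual API: the `Predictor` interface, its method names, `fakePredictor`, and the `Evaluate`, `preprocess`, and `postprocess` helpers are all assumptions made for this example. It shows that only the predictor is framework-specific, while pre-processing, post-processing, and the surrounding pipeline are shared.

```go
package main

import (
	"fmt"
	"sort"
)

// Predictor is a hypothetical thin wrapper interface. Each DL framework
// would supply its own implementation; the names here are illustrative.
type Predictor interface {
	Load(modelName string) error
	Predict(input []float32) ([]float32, error)
	Close() error
}

// fakePredictor stands in for a real framework binding. It returns the
// input unchanged, as if the input were already a vector of class scores.
type fakePredictor struct{ loaded bool }

func (p *fakePredictor) Load(modelName string) error {
	if modelName == "" {
		return fmt.Errorf("empty model name")
	}
	p.loaded = true
	return nil
}

func (p *fakePredictor) Predict(input []float32) ([]float32, error) {
	if !p.loaded {
		return nil, fmt.Errorf("model not loaded")
	}
	out := make([]float32, len(input))
	copy(out, input)
	return out, nil
}

func (p *fakePredictor) Close() error {
	p.loaded = false
	return nil
}

// preprocess scales raw byte values into [0, 1]. It is framework-independent.
func preprocess(raw []byte) []float32 {
	out := make([]float32, len(raw))
	for i, b := range raw {
		out[i] = float32(b) / 255
	}
	return out
}

// postprocess returns the indices of the k highest scores, best first.
// It is framework-independent.
func postprocess(scores []float32, k int) []int {
	idx := make([]int, len(scores))
	for i := range idx {
		idx[i] = i
	}
	sort.SliceStable(idx, func(a, b int) bool {
		return scores[idx[a]] > scores[idx[b]]
	})
	if k < 0 {
		k = 0
	}
	if k > len(idx) {
		k = len(idx)
	}
	return idx[:k]
}

// Evaluate is the common agent pipeline: load, pre-process, predict,
// post-process. Only the Predictor argument varies across frameworks.
func Evaluate(p Predictor, model string, raw []byte, k int) ([]int, error) {
	if err := p.Load(model); err != nil {
		return nil, err
	}
	defer p.Close()
	scores, err := p.Predict(preprocess(raw))
	if err != nil {
		return nil, err
	}
	return postprocess(scores, k), nil
}

func main() {
	top, err := Evaluate(&fakePredictor{}, "example-model", []byte{10, 200, 50}, 2)
	if err != nil {
		panic(err)
	}
	fmt.Println(top) // prints [1 2]
}
```

Under this sketch, supporting another framework means writing one more type that satisfies `Predictor`; `Evaluate` and its helpers stay untouched.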

The figure above shows the high-level components and workflows of MLModelScope. Refer to "The Design and Implementation of a Scalable DL Benchmarking Platform" for more details.
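One part of the workflow, the server consulting the registry to find an agent for a requested model, can be sketched as below. This is an illustrative in-memory stand-in, not the real distributed registry: the `Agent` fields, the `Registry` type, and the `Dispatch` function are hypothetical names chosen for this example, and picking the first matching agent is an assumed policy.

```go
package main

import (
	"errors"
	"fmt"
	"sort"
	"sync"
)

// Agent is a hypothetical registry entry describing a running agent.
type Agent struct {
	Address   string
	Framework string
	Models    []string
}

// Registry is an in-memory stand-in for the distributed key-value store.
type Registry struct {
	mu     sync.RWMutex
	agents map[string]Agent // keyed by agent address
}

func NewRegistry() *Registry {
	return &Registry{agents: make(map[string]Agent)}
}

// Register adds or replaces the entry for an agent.
func (r *Registry) Register(a Agent) {
	r.mu.Lock()
	defer r.mu.Unlock()
	r.agents[a.Address] = a
}

// Find returns the addresses of agents that serve the given model on the
// given framework, sorted so the result is deterministic.
func (r *Registry) Find(framework, model string) []string {
	r.mu.RLock()
	defer r.mu.RUnlock()
	var found []string
	for addr, a := range r.agents {
		if a.Framework != framework {
			continue
		}
		for _, m := range a.Models {
			if m == model {
				found = append(found, addr)
				break
			}
		}
	}
	sort.Strings(found)
	return found
}

// ErrNoAgent is returned when no registered agent can serve a request.
var ErrNoAgent = errors.New("no agent serves the requested model")

// Dispatch picks the first matching agent (an assumed policy for this sketch).
func Dispatch(r *Registry, framework, model string) (string, error) {
	candidates := r.Find(framework, model)
	if len(candidates) == 0 {
		return "", ErrNoAgent
	}
	return candidates[0], nil
}

func main() {
	reg := NewRegistry()
	reg.Register(Agent{Address: "10.0.0.2:8080", Framework: "frameworkA", Models: []string{"model-x"}})
	reg.Register(Agent{Address: "10.0.0.1:8080", Framework: "frameworkA", Models: []string{"model-x", "model-y"}})

	addr, err := Dispatch(reg, "frameworkA", "model-x")
	if err != nil {
		panic(err)
	}
	fmt.Println("dispatching to", addr)
}
```

A real deployment would replace the map with the distributed store and would likely weigh system characteristics when choosing among candidates.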


The MLModelScope code base spans multiple repositories under github.com/rai-project.

MLModelScope Client and Server

MLModelScope Agents

All agents are built on top of the common dlframework code.

Framework Go Bindings

To avoid the overhead introduced by scripting languages, MLModelScope calls each framework’s C-level API through Go bindings. As a result, the model evaluation profile is as close to the hardware as possible.